Tired of OpenAI's limitations around private data and eager to experiment with Retrieval-Augmented Generation (RAG) on my own terms, I dove headfirst into a holiday quest: building a local, OpenAI-free RAG application. Countless tutorials cover Full Stack development, but their "AI" magic often relies on OpenAI APIs, which leaves private data concerns unresolved. So, fueled by frustration and holiday spirit, I set out to forge my own path, crafting a RAG application that would sing offline, on my own machine.

This post shares the hard-won wisdom from my quest, hoping to guide fellow explorers building RAGs in their own kingdoms. Buckle up, and let's delve into the challenges and triumphs of this offline adventure!

Retrieval-Augmented Generation (RAG) in Controlled Environments

There are several advantages to running a Large Language Model (LLM), Vector Store, and Index within your own data center or controlled cloud environment, compared to relying on external services:

- Data control: You maintain complete control over your sensitive data, eliminating the risk of unauthorized access or leaks in third-party environments.

- Compliance: More easily meet compliance requirements for data privacy and security regulations specific to your industry or region.

- Customization: You can fine-tune the LLM and index to be more secure and privacy-preserving for your specific needs.

- Integration: Easier integration with your existing infrastructure and systems.

- Potential cost savings: Although initial setup costs might be higher, running your own infrastructure can be more cost-effective in the long run, especially for high-volume usage.

- Predictable costs: You have more control over budgeting and avoid unpredictable scaling costs of external services.

- Independence: Reduced reliance on external vendors and a lower risk of vendor lock-in.

- Innovation: Facilitates research and development of LLMs and applications tailored to your specific needs.

- Transparency: You have full visibility into the operation and performance of your LLM and data infrastructure.

Traditionally, training a base model is the most expensive stage of AI development. Using a pre-trained large language model (LLM), as proposed in this post, eliminates this expense. Owning and running this setup should incur costs comparable to any other IT application within your organization. To illustrate, the sample application below runs on a late-2020 MacBook Air with an M1 chip and generates responses to queries within 30 seconds.

Before we examine the anatomy of a RAG application and its data integration points, let's identify where sensitive data could leak.

When using a RAG pipeline with an external API like OpenAI, there are several points where your sensitive data could potentially be compromised. Here are some of the key areas to consider:

Data submitted to the API:

- Query and context: The query itself and any additional context provided to the API could contain personally identifiable information (PII) or other sensitive data.

- Retrieved documents: If the RAG pipeline retrieves documents from a corporate knowledge base, those documents might contain PII or sensitive information that gets incorporated into the Index and transmitted to the external LLM API to generate the answer.

Transmission and storage:

- Communication channels: Data transmitted between your system and the external API might be vulnerable to interception if not properly secured with encryption protocols like HTTPS.

- API logs and storage: The external API provider might store logs containing your queries, contexts, and retrieved documents, which could potentially be accessed by unauthorized individuals or leaked in security breaches.

Model access and outputs:

- Model access control: If the external API offers access to the underlying LLM, it's crucial to ensure proper access controls and logging to prevent unauthorized use that could expose sensitive data.

- Generated text: Be aware that the LLM might still include personal information or sensitive content in its generated responses, even if the query itself didn't explicitly contain it. This can happen because the retrieved context contains such information, because the model memorized it from its training data, or because of its imperfect understanding of context.

The quest for private, accurate and efficient search has led me down many winding paths, and recently, three intriguing technologies have emerged with the potential to revolutionize how we interact with information: LlamaIndex, Ollama, and Weaviate. But how do these tools work individually, and how can they be combined to build a powerful Retrieval-Augmented Generation (RAG) application? Let's dive into their unique strengths and weave them together for a compelling answer.

1. LlamaIndex: Indexing for Efficiency

Imagine a librarian meticulously filing away knowledge in an easily accessible system. That's essentially what LlamaIndex does. It's a lightweight data framework that can run on-premises, and it excels at turning documents like PDFs, emails, and code into dense vector representations using an embedding model of your choice. Paired with a local embedding model and a local LLM, it operates entirely offline, keeping your data secure and private. Imagine feeding LlamaIndex a corpus of scientific papers: it would churn out a dense index, ready for lightning-fast searches.
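
To make the idea of a "dense index" concrete, here is a minimal, dependency-free sketch of what an indexing step does: split each document into overlapping chunks and map every chunk to a fixed-length vector. It does not use LlamaIndex's API. The hashing "embedding" (`toy_embed`) is a hypothetical stand-in for a real local embedding model, and the chunk size and overlap are arbitrary assumptions.

```python
import hashlib
import math
import re


def chunk_text(text: str, chunk_size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into windows of `chunk_size` words that overlap by `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):  # last window reached the end of the text
            break
    return chunks


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Hypothetical stand-in for an embedding model: a hashed bag of words, L2-normalised."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.sha256(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def build_index(documents: dict[str, str]) -> list[dict]:
    """Return one index entry per chunk: source document id, chunk number, text and vector."""
    index = []
    for doc_id, text in documents.items():
        for i, chunk in enumerate(chunk_text(text)):
            index.append({"doc_id": doc_id, "chunk_no": i, "text": chunk, "vector": toy_embed(chunk)})
    return index
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from either side. A real embedding model replaces `toy_embed` so that chunks with similar meaning, not just shared words, end up with nearby vectors.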

2. Ollama: Run LLMs on Your Local Machine

But simply finding relevant documents isn't enough. We need to understand what's inside them. That's where Large Language Models (LLMs) shine. LlamaIndex uses its vectors to retrieve the snippets most relevant to a query, and the LLM reads those snippets to answer it. Think of it as a skilled annotator summarizing key points: the LLM works through the retrieved text, pinpointing the passages that answer your questions or resonate with your search terms.

Ollama runs LLMs on your local machine, and the library of models it can run keeps growing. Why limit ourselves to one organisation's LLM (i.e., OpenAI's) when we can pick whichever model fits our project?
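
Ollama serves the models it runs through a local HTTP API, by default on port 11434, so an application can talk to it without any vendor SDK. The sketch below calls the `/api/generate` endpoint using only the Python standard library. It assumes an Ollama server is running locally, and the model name `llama2` in the usage note is only an example: use any model you have fetched with `ollama pull`.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address


def build_generate_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False, Ollama replies with one JSON object; "response" holds the answer.
    return body["response"]
```

Calling `generate("llama2", "What is retrieval-augmented generation?")` returns the model's reply as a string, and nothing leaves `localhost`. Switching models is just a matter of changing the first argument.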

3. Weaviate: Graphing Connections for Insight

Now, imagine these extracted snippets floating in a vast ocean of information. To truly grasp their significance, we need to connect them. Enter Weaviate: an open-source, AI-native vector database that helps developers create intuitive and reliable AI-powered applications.

With Weaviate, we can not only find relevant documents but also understand how they connect to each other, uncovering hidden patterns and relationships. Think of it as an expert cartographer mapping the knowledge landscape, revealing a web of connected insights.
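
At its core, a vector database answers one question: which stored vectors are closest to the query vector? The dependency-free sketch below shows that idea with brute-force cosine similarity over a small in-memory store. It is not Weaviate's API: the class and method names are hypothetical, and Weaviate uses an approximate nearest-neighbour index (HNSW by default) rather than scanning every vector.

```python
import heapq
import math


class InMemoryVectorStore:
    """Hypothetical toy vector store: exact (brute-force) cosine similarity search."""

    def __init__(self) -> None:
        self._items: list[tuple[str, list[float]]] = []

    def add(self, text: str, vector: list[float]) -> None:
        self._items.append((text, vector))

    @staticmethod
    def _cosine(a: list[float], b: list[float]) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        norm_a = math.sqrt(sum(x * x for x in a))
        norm_b = math.sqrt(sum(y * y for y in b))
        if norm_a == 0 or norm_b == 0:
            return 0.0  # a zero vector carries no direction, so treat it as unrelated
        return dot / (norm_a * norm_b)

    def search(self, query: list[float], k: int = 3) -> list[tuple[float, str]]:
        """Return the k most similar (score, text) pairs, best match first."""
        scored = ((self._cosine(query, vec), text) for text, vec in self._items)
        return heapq.nlargest(k, scored, key=lambda pair: pair[0])
```

Brute force costs one comparison per stored vector on every query, which is fine for a demo but is exactly the cost that approximate indexes like HNSW avoid on large collections.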

The Anatomy of a RAG Application

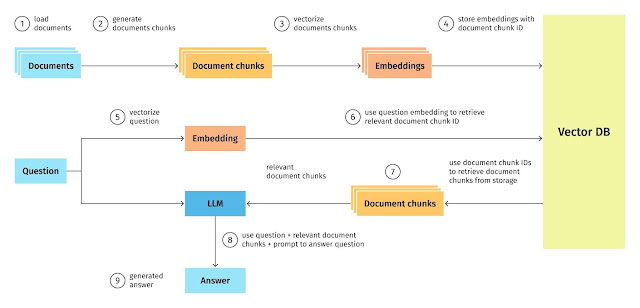

Imagine a magic search engine for your private data. That's essentially what a Retrieval-Augmented Generation (RAG) application does. Here's how it works:

- Indexing Secrets: It starts by indexing all your confidential information, much like a librarian meticulously shelves books. This "index" serves as a map to your knowledge treasure trove.

- Turning Words into Numbers: Each piece of information gets transformed into a list of numbers called a "vector" (an embedding). Pieces with similar meanings get similar vectors, which lets the computer understand the meaning and relationships between different data points.

- Smart Prompting: When you ask a question, the RAG application doesn't just search blindly. It retrieves the most relevant passages from the index and crafts a "prompt" that combines them with your query. Think of it as a detailed map pointing the Large Language Model (LLM) to the best answer within your private data.

- Consulting the Oracle: The LLM, like a powerful AI wizard, receives the prompt, which already carries the relevant passages from your data, and crafts a comprehensive and cohesive response tailored to your specific question.
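
The "smart prompting" step is mostly string assembly: the retrieved chunks are placed into a template next to the question, and that single prompt is all the LLM sees. Below is a minimal sketch. The template wording and the `max_chars` context budget are hypothetical choices for illustration, not the template LlamaIndex actually uses.

```python
# Hypothetical prompt template: retrieved context first, then the question.
PROMPT_TEMPLATE = (
    "Context information is below.\n"
    "---------------------\n"
    "{context}\n"
    "---------------------\n"
    "Using only the context above, answer the question. "
    "If the context does not contain the answer, say you don't know.\n"
    "Question: {question}\n"
    "Answer:"
)


def build_rag_prompt(question: str, retrieved_chunks: list[str], max_chars: int = 4000) -> str:
    """Combine the question with the best chunks, stopping before the context budget is exceeded."""
    selected: list[str] = []
    used = 0
    for chunk in retrieved_chunks:  # assumed to be ordered best match first
        if used + len(chunk) > max_chars:
            break
        selected.append(chunk)
        used += len(chunk)
    context = "\n\n".join(selected)
    return PROMPT_TEMPLATE.format(context=context, question=question)
```

The character budget is a crude stand-in for the model's context window. Instructing the model to answer only from the supplied context is what keeps its reply grounded in your private data rather than in whatever it memorized during training.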

The image below is a good representation of the process flow of a RAG application.

Source: https://blog.griddynamics.com/retrieval-augmented-generation-llm

So, instead of relying on external sources that might not have your confidential information or understand its context, a RAG application lets you unlock the hidden riches within your own data, right on your own machine.

The end of my quest ...

Once I had my own end-to-end RAG application running on my local machine, I decided to publish the code and insights on GitHub in two new repositories:

1. Retrieval-Augmented Generation (RAG) Bootstrap Application

Code: https://github.com/tyrell/llm-ollama-llamaindex-bootstrap

I developed this application as a template for my upcoming projects. The application operates independently and is based on a tutorial by Andrej Baranovskij. I chose his tutorial because it not only matched my goals but also executed successfully on the first attempt. I forked his original repository and customized the application according to my preferences. Additionally, I included extensive comments in the code to serve as reminders for myself and to assist newcomers in understanding the codebase.

2. Retrieval-Augmented Generation (RAG) Bootstrap Application UI

Code: https://github.com/tyrell/llm-ollama-llamaindex-bootstrap-ui